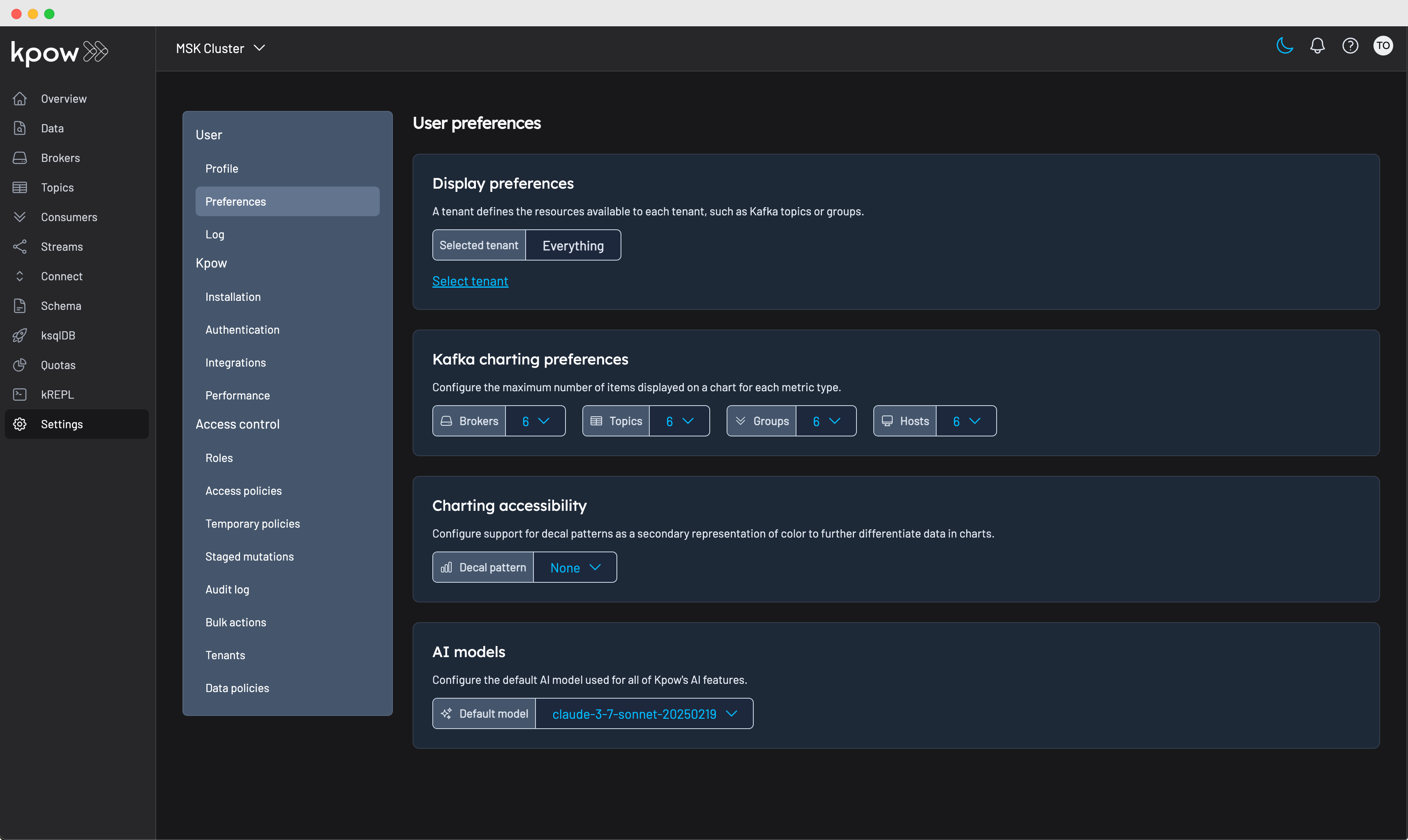

AI models

Kpow supports 'bring your own' (BYO) AI models for optional AI features and MCP tool use within the product.

Configuration

You can configure one or more AI model providers for use within Kpow. In your user preferences, you can set the default model that Kpow's AI features use.
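As a quick sanity check of which providers an environment enables, the sketch below reads the environment variables documented in each provider section and applies the documented defaults. It is a hypothetical helper (configured_providers is not part of Kpow), and it assumes a provider counts as configured when its required variable is set: BEDROCK_MODEL, OPENAI_API_KEY, ANTHROPIC_API_KEY, or OLLAMA_MODEL. Kpow's own resolution logic may differ.

```python
import os
from typing import Mapping


def configured_providers(env: Mapping[str, str] = os.environ) -> dict[str, dict[str, str]]:
    """Hypothetical helper: report which AI providers the environment configures.

    Assumption: a provider is "configured" when its required variable is set.
    Defaults mirror the tables on this page. API keys are never returned.
    """
    providers: dict[str, dict[str, str]] = {}
    if env.get("BEDROCK_MODEL"):
        providers["bedrock"] = {
            "model": env["BEDROCK_MODEL"],
            "region": env.get("BEDROCK_REGION", "us-east-1"),
        }
    if env.get("OPENAI_API_KEY"):
        providers["openai"] = {"model": env.get("OPENAI_MODEL", "gpt-4o-mini")}
    if env.get("ANTHROPIC_API_KEY"):
        providers["anthropic"] = {
            "model": env.get("ANTHROPIC_MODEL", "claude-3-7-sonnet-20250219")
        }
    if env.get("OLLAMA_MODEL"):
        providers["ollama"] = {
            "model": env["OLLAMA_MODEL"],
            "url": env.get("OLLAMA_URL", "http://localhost:11434"),
        }
    return providers


if __name__ == "__main__":
    # Print each configured provider with its resolved settings.
    for name, settings in configured_providers().items():
        print(name, settings)
```

The helper deliberately leaves API keys out of its result so the output can be logged without exposing secrets.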

AWS Bedrock

Kpow supports integration with Amazon Bedrock for AI/LLM use cases.

To enable Bedrock models, set the following environment variables:

| Variable | Required | Default | Description |
|---|---|---|---|
| BEDROCK_MODEL | Yes | (none) | Model ID to use from Bedrock. See available model IDs. |
| BEDROCK_REGION | No | us-east-1 | AWS region where the Bedrock model is hosted. |

Example configuration

```bash
export BEDROCK_MODEL="amazon.nova-lite-v1:0"
export BEDROCK_REGION="us-east-1"
```

Example IAM policies for Kpow

To invoke an Amazon Bedrock model, the IAM identity that Kpow runs as must, at minimum, be granted the bedrock:InvokeModel permission. You can create policies that are highly specific, restricting access to a particular model in a specific AWS region, or you can use wildcards for broader access.

A restrictive IAM policy allows an identity (like a user or role) to invoke only a designated model in a single region. This aligns with the security best practice of granting least privilege.

Here is an example of a policy that allows invoking a specific model in a designated region:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "bedrock:InvokeModel"
      ],
      "Resource": "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-lite-v1:0"
    }
  ]
}
```
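If you generate IAM policies from the same values you pass to Kpow, building the Resource ARN from BEDROCK_REGION and BEDROCK_MODEL keeps the policy and the Kpow configuration in sync. The following is a minimal sketch under that assumption; bedrock_invoke_policy is a hypothetical helper name, and for this page's example values it produces the same policy as the JSON above.

```python
import json
import os


def bedrock_invoke_policy(region: str, model_id: str) -> dict:
    """Hypothetical helper: allow bedrock:InvokeModel on one foundation model in one region."""
    # Foundation-model ARNs leave the account-ID field empty, hence the "::".
    resource = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    # Reuse the Kpow settings; fall back to this page's example values if they are unset.
    region = os.environ.get("BEDROCK_REGION", "us-east-1")
    model_id = os.environ.get("BEDROCK_MODEL", "amazon.nova-lite-v1:0")
    print(json.dumps(bedrock_invoke_policy(region, model_id), indent=2))
```

For example, you could save the printed JSON as policy.json and create it with aws iam create-policy --policy-name kpow-bedrock --policy-document file://policy.json (the policy name is a placeholder).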

For scenarios requiring more flexibility, you can use wildcards (*) in the Resource ARN. This allows access to multiple models or regions without needing to list each one individually.

This example policy permits invoking any Bedrock foundation model in any region:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "bedrock:InvokeModel",
      "Resource": "arn:aws:bedrock:*::foundation-model/*"
    }
  ]
}
```
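To see which ARNs a wildcard Resource covers, the sketch below approximates IAM's * and ? matching with Python's fnmatch.fnmatchcase. It is an approximation, not an IAM policy evaluator: fnmatch also treats [...] as a character class, and IAM applies matching rules of its own. The example ARNs are illustrative; because the pattern names the foundation-model resource type, resources of other types (such as the inference-profile ARN shown) fall outside it.

```python
from fnmatch import fnmatchcase


def arn_matches(pattern: str, arn: str) -> bool:
    """Approximate IAM Resource matching: '*' matches any run of characters, '?' one character.

    Caveat: fnmatch also treats '[...]' as a character class, which IAM does not.
    This is a rough check, not a substitute for IAM's own policy evaluation.
    """
    return fnmatchcase(arn, pattern)


WILDCARD = "arn:aws:bedrock:*::foundation-model/*"

# Illustrative ARNs; 123456789012 is a placeholder account ID.
EXAMPLES = [
    "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-lite-v1:0",
    "arn:aws:bedrock:us-west-2::foundation-model/amazon.nova-lite-v1:0",
    "arn:aws:bedrock:us-east-1:123456789012:inference-profile/us.amazon.nova-lite-v1:0",
]

if __name__ == "__main__":
    # Print whether the wildcard policy's Resource covers each example ARN.
    for arn in EXAMPLES:
        print(arn_matches(WILDCARD, arn), arn)
```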

If you encounter an AccessDeniedException when invoking a model, the cause may be that access to that model has not been enabled for your account in that region, or that the IAM permissions are insufficient.

OpenAI

Kpow supports integration with OpenAI for AI/LLM features such as filter generation and other natural language tools.

To enable OpenAI models, set the following environment variables:

| Variable | Description | Default | Example |
|---|---|---|---|
| OPENAI_API_KEY | Your OpenAI API key | (required) | XXXX |
| OPENAI_MODEL | Model ID to use | gpt-4o-mini | o3-mini |

See this page for a list of supported OpenAI models.

Example configuration

```bash
export OPENAI_API_KEY="XXXX"
export OPENAI_MODEL="o3-mini" # default gpt-4o-mini
```

Anthropic

Kpow supports integration with Anthropic for AI/LLM use cases.

To enable Anthropic models, set the following environment variables:

| Variable | Description | Default | Example |
|---|---|---|---|
| ANTHROPIC_API_KEY | Your Anthropic API key | (required) | XXXX |
| ANTHROPIC_MODEL | Model ID to use | claude-3-7-sonnet-20250219 | claude-opus-4-20250514 |

See this page for a list of supported Anthropic models. Kpow supports any model from the Anthropic API column.

Example configuration

```bash
export ANTHROPIC_API_KEY="XXXX"
export ANTHROPIC_MODEL="claude-opus-4-20250514" # default claude-3-7-sonnet-20250219
```

Ollama

Kpow supports integration with Ollama for AI/LLM use cases.

To enable Ollama models, set the following environment variables:

| Variable | Description | Default | Example |
|---|---|---|---|
| OLLAMA_MODEL | Model ID to use | (none) | llama3.1:8b |
| OLLAMA_URL | URL of the Ollama model server | http://localhost:11434 | https://prod.ollama.mycorp.io |

Note: we only support Ollama models that are capable of tool calling.

Example configuration

```bash
export OLLAMA_MODEL="llama3.1:8b"
export OLLAMA_URL="https://prod.ollama.mycorp.io" # default http://localhost:11434
```
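Before pointing Kpow at an Ollama server, it can help to confirm that OLLAMA_MODEL has actually been pulled on the server at OLLAMA_URL. Ollama's GET /api/tags endpoint lists the models available locally on that server; the sketch below (all helper names are hypothetical) checks the configured model against that list. It does not check tool-calling support, which the note above requires, and it assumes Ollama's convention that an untagged model name refers to the :latest tag.

```python
import json
import os
import urllib.request


def normalize(model: str) -> str:
    # Ollama treats an untagged name such as "llama3.1" as "llama3.1:latest".
    return model if ":" in model else f"{model}:latest"


def model_available(tags: dict, model: str) -> bool:
    """Return True if `model` appears in a decoded Ollama /api/tags response."""
    names = {m.get("name") for m in tags.get("models", [])}
    return normalize(model) in names


def fetch_tags(base_url: str, timeout: float = 5.0) -> dict:
    # GET /api/tags returns {"models": [{"name": "llama3.1:8b", ...}, ...]}.
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def check_configured_model() -> bool:
    # Same default as the OLLAMA_URL row in the table above.
    base_url = os.environ.get("OLLAMA_URL", "http://localhost:11434")
    return model_available(fetch_tags(base_url), os.environ["OLLAMA_MODEL"])
```

On a host that can reach the Ollama server, call check_configured_model(); it raises KeyError if OLLAMA_MODEL is unset, and urllib.error.URLError if the server is unreachable.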

Missing AI provider?

Contact support@factorhouse.io to request support for your AI model.

AI features

kJQ filter generation

Transform natural language queries into powerful kJQ filters with AI-assisted query generation. This feature empowers users of all technical backgrounds to extract insights from Kafka topics without requiring deep JQ programming knowledge.

How it works

Simply describe what you're looking for in plain English, and the AI model generates a syntactically correct kJQ filter tailored to your data. The system leverages:

- Natural language processing: Convert conversational prompts like "show me all orders over $100 from the last hour" into precise kJQ expressions.

- Schema-aware generation: When topic schemas are available, the AI optionally incorporates field names, data types, and structure to create more accurate filters.

- Validation integration: Generated filters are automatically validated against Kpow's kJQ engine to ensure syntactic correctness before execution.

Usage

Navigate to any topic's Data Inspect view and select the AI Filter option. Enter your query in natural language, and Kpow will generate the corresponding kJQ filter. You can then execute, modify, or save the generated filter for future use.

The AI filter generator works best when provided with specific, actionable descriptions of the data you want to find. Include field names, value ranges, example data and logical operators in your natural language query for optimal results.
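Because the generator works best with concrete field names, it can help to list the fields of a sample message before writing a prompt. The sketch below walks a decoded JSON record and prints each leaf as a jq-style path with its JSON type. It is a hypothetical helper, not part of Kpow; the sample order record is invented for illustration, and the paths are relative to the decoded message value, so adjust the prefix to match how your kJQ filter addresses that value.

```python
import json
from typing import Any, Iterator


def json_type(value: Any) -> str:
    # Check bool before int: in Python, bool is a subclass of int.
    if value is None:
        return "null"
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    return type(value).__name__


def field_paths(value: Any, prefix: str = "") -> Iterator[tuple[str, str]]:
    """Yield (jq-style path, JSON type) for every leaf of a decoded JSON value."""
    if isinstance(value, dict):
        for key, child in value.items():
            name = str(key)
            # Identifier-like keys use .key; anything else is quoted, e.g. ."first name".
            step = f".{name}" if name.isidentifier() else f".{json.dumps(name)}"
            yield from field_paths(child, prefix + step)
    elif isinstance(value, list):
        if value:
            # Describe array elements with jq's [] iterator, using the first element only.
            yield from field_paths(value[0], prefix + "[]")
        else:
            yield (prefix or ".", "array (empty)")
    else:
        yield (prefix or ".", json_type(value))


if __name__ == "__main__":
    # Hypothetical sample message value for the "orders over $100" example.
    sample = json.loads(
        '{"order_id": "A-1001", "amount": 129.5, "currency": "USD",'
        ' "items": [{"sku": "X1", "qty": 2}], "express": true}'
    )
    for path, kind in field_paths(sample):
        print(f"{path} ({kind})")
```

Pasting a few of these paths, with their types and an example value, into the natural-language query gives the model the field names it needs.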

AI feature notes

- We cannot control how AI providers process your data. For sensitive data, use local models or enterprise AI services with verified privacy guarantees.

- AI-generated filters are probabilistic and may miss edge cases. Provide detailed context and examples for more accurate results.

- We recommend models with capabilities equivalent to gpt-4o-mini, claude-3-5-sonnet-20241022, or newer.